Paper Overview

Today, many e-commerce websites personalize their content, including Netflix (movie recommendations), Amazon (product suggestions), and Yelp (business reviews). In many cases, personalization provides advantages for users: for example, when a user searches for an ambiguous query such as "router", Amazon may be able to suggest the woodworking tool instead of networking devices. However, personalization on e-commerce sites may also be used to the user's disadvantage by manipulating the products shown (price steering) or by customizing the prices of products (price discrimination). Unfortunately, we currently lack the tools and techniques necessary to detect such behavior.

Experiments

Experiment 1

The purpose of the first experiment was to find out the prices and order of products shown to real people. We accomplished this by setting up an experiment with Amazon Mechanical Turk (AMT). The turkers were instructed to configure their browser to send all traffic through our proxy for data collection purposes and then go to a website we created that would load all of the pages we wanted. Once each page loaded in the browser, we were able to store the HTML on our server for further processing.

Experiment 2

The purpose of the second experiment was to find out why personalization was occurring. We achieved this by writing PhantomJS scripts to make requests to the sites. We used PhantomJS because it gives us complete control: we can create fake users and give those users specific attributes (such as browser, cookies, and device). With that control, we can find out what causes personalization.
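As a rough illustration of the idea of fake users with controlled attributes, the sketch below builds a set of synthetic profiles that each differ from a control profile in exactly one attribute, so that any change in results can be attributed to that attribute. The `Profile` class, its field names, and the example values are hypothetical and are not taken from our scripts.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Profile:
    """Attributes of one synthetic user (hypothetical field names)."""
    browser: str = "Chrome"
    device: str = "desktop"
    cookies: str = "none"


def make_treatments(control, variations):
    """Return profiles that each differ from the control in exactly one attribute."""
    treatments = []
    for field_name, values in variations.items():
        for value in values:
            if getattr(control, field_name) != value:
                # Copy the control and change only this one field.
                treatments.append(replace(control, **{field_name: value}))
    return treatments


control = Profile()
treatments = make_treatments(control, {
    "browser": ["Firefox", "Safari"],
    "device": ["iPhone", "Android phone"],
    "cookies": ["logged_in"],
})
for treatment in treatments:
    print(treatment)
```

Changing one attribute at a time keeps the comparison against the control easy to interpret.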

Overall Results

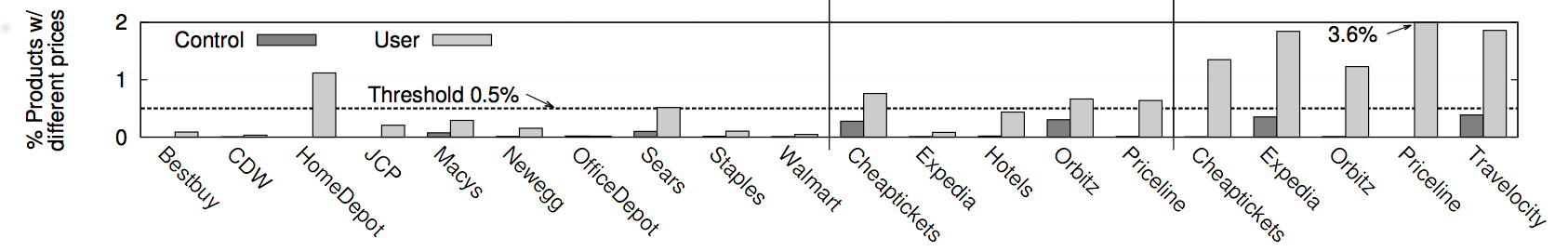

After analyzing the data we collected, we noticed that most sites had some form of personalization. The graph below shows each site we experimented on and the percentage of products that have a price difference between our control data set and the AMT data set. The section on the left covers e-commerce sites, the middle covers hotels, and the right covers rental cars.

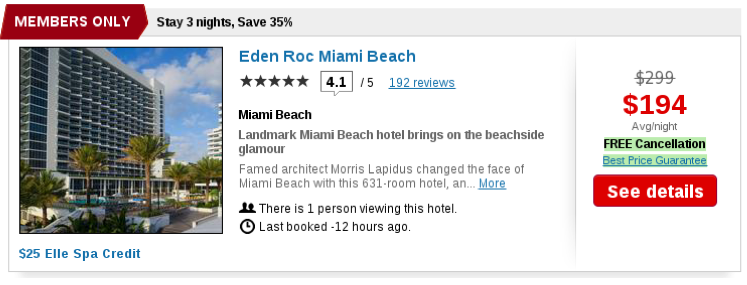
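The per-site metric described above, the percentage of products whose price differs between the control and an AMT user, could be computed along the following lines. This is a minimal sketch rather than our analysis code: the function name, the dictionary layout, and the sample prices are made up for illustration, and only products seen by both sides are compared.

```python
def percent_price_differences(control_prices, user_prices, tolerance=0.0):
    """Percentage of products seen by both sides whose prices differ."""
    shared = control_prices.keys() & user_prices.keys()
    if not shared:
        return 0.0
    differing = sum(
        1 for product in shared
        if abs(control_prices[product] - user_prices[product]) > tolerance
    )
    return 100.0 * differing / len(shared)


# Made-up prices keyed by a product identifier.
control = {"hotel_a": 120.0, "hotel_b": 89.0, "hotel_c": 210.0, "hotel_d": 75.0}
amt_user = {"hotel_a": 120.0, "hotel_b": 95.0, "hotel_c": 210.0, "hotel_e": 60.0}

print(percent_price_differences(control, amt_user))
```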

Part of the experiments we ran included saving every page that was loaded, so we were able to go through and find examples where prices were different. The pictures below show one of many instances we saw. The image on the left shows the price that the AMT user received, while the one on the right shows what our control and comparison experiments received.

We noticed another difference on Travelocity, where a member was given a lower price than a non-member. The image below to the left shows the hotel shown to the member, and on the right is the same hotel with a higher price for the non-member.

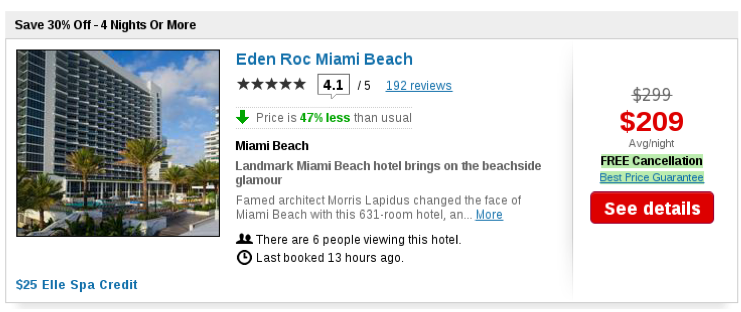

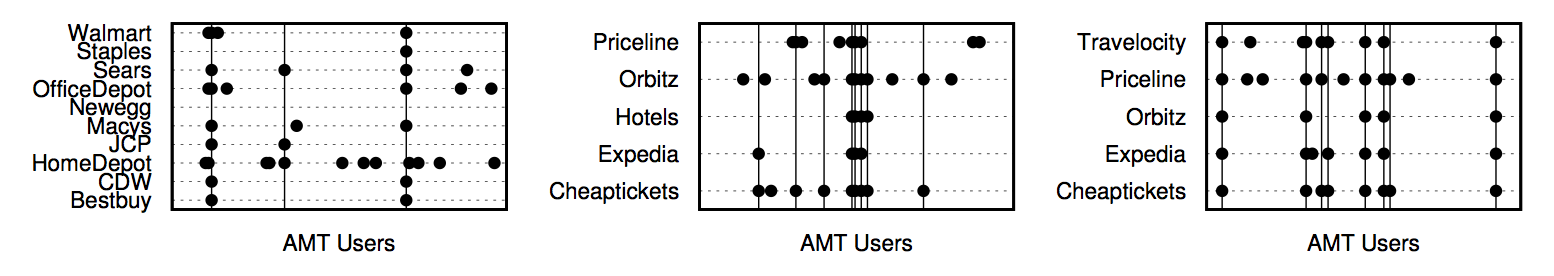

There is an interesting pattern that we noticed across the AMT users. Not every user saw personalization of any kind, but the ones that did were more likely to see personalization on other sites as well. The image below shows this pattern. The x-axis of each graph represents each individual AMT user, and the dots show that the corresponding user had personalization for the site on the y-axis.

Cheaptickets and Orbitz

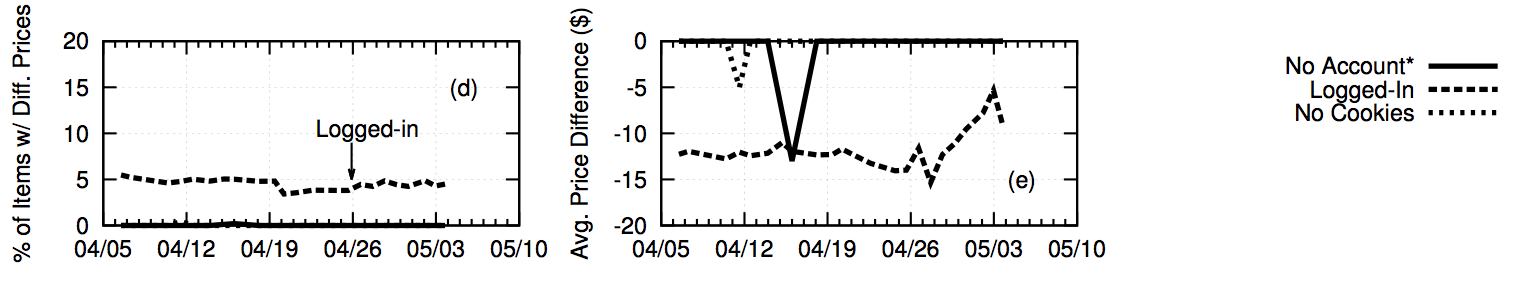

The first sites that we examine are Cheaptickets and Orbitz. These sites are actually one company, and appear to be implemented using the same HTML structure and server-side logic. In our experiments, we observe both sites personalizing hotel results based on user account.

Below, we have a couple of graphs showing price discrimination on Cheaptickets. The graph on the left shows the percentage of items with different prices: logged-in users receive different prices on about 5% of hotels. The graph on the right illustrates the differences in price, and we find that hotels with inconsistent prices are $12 cheaper on average.

Hotels.com and Expedia

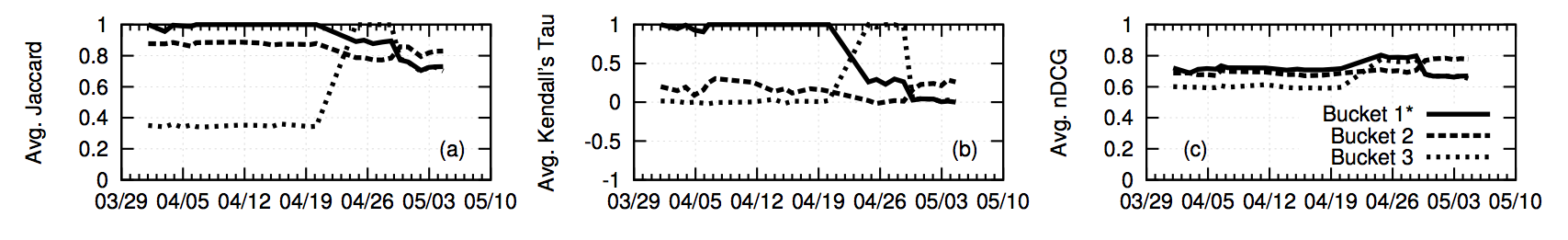

Our analysis reveals that Hotels.com and Expedia implement the same personalization strategy: randomized A/B tests on users. Since these sites are similar, we focus on Expedia. The results we found led us to suspect that Expedia was randomly assigning each of our treatments to a “bucket”. This is common practice on sites that use A/B testing: users are randomly assigned to buckets based on their cookie, and the characteristics of the site change depending on the bucket a user is placed in.
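To make the idea of cookie-based buckets concrete, here is one common way such an assignment can be implemented: hash the cookie identifier and take the result modulo the number of buckets. This is only a sketch of the general technique; we do not know how Expedia assigns buckets, and the function name, salt, and bucket count below are assumptions.

```python
import hashlib


def assign_bucket(cookie_id: str, num_buckets: int = 3, salt: str = "experiment-1") -> int:
    """Map a cookie identifier to a bucket in the range [0, num_buckets)."""
    digest = hashlib.sha256(f"{salt}:{cookie_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % num_buckets


# The same cookie always lands in the same bucket, while a fresh cookie
# (for example, after clearing cookies) may land in a different one.
for cookie in ["cookie-aaa", "cookie-bbb", "cookie-ccc"]:
    print(cookie, assign_bucket(cookie))
```

Under a scheme like this, a user's experience stays consistent only for as long as the cookie persists.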

The image below shows two results. First, we discover that users are periodically shuffled into different buckets. Second, Expedia is steering users in some buckets towards more expensive hotels.

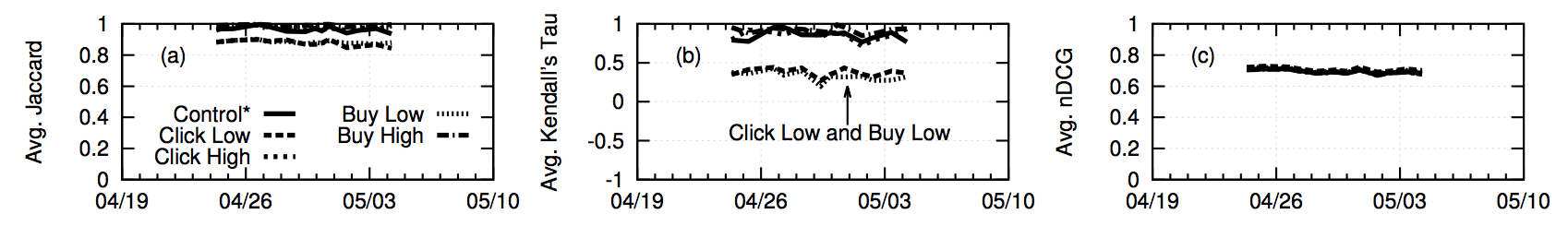

Priceline

When analyzing Priceline, we discovered they alter hotel search results based on the user's history of clicks and purchases. Users who clicked on or reserved low-priced hotel rooms receive slightly different results in a much different order, compared to users who click on nothing, or click/reserve expensive hotel rooms. We manually examined these search results but could not locate any clear reasons for this reordering. Although it is clear that account history impacts search results on Priceline, we cannot classify the changes as steering.

The image below shows how Priceline alters hotel search results based on a user's click and purchase history.

Travelocity

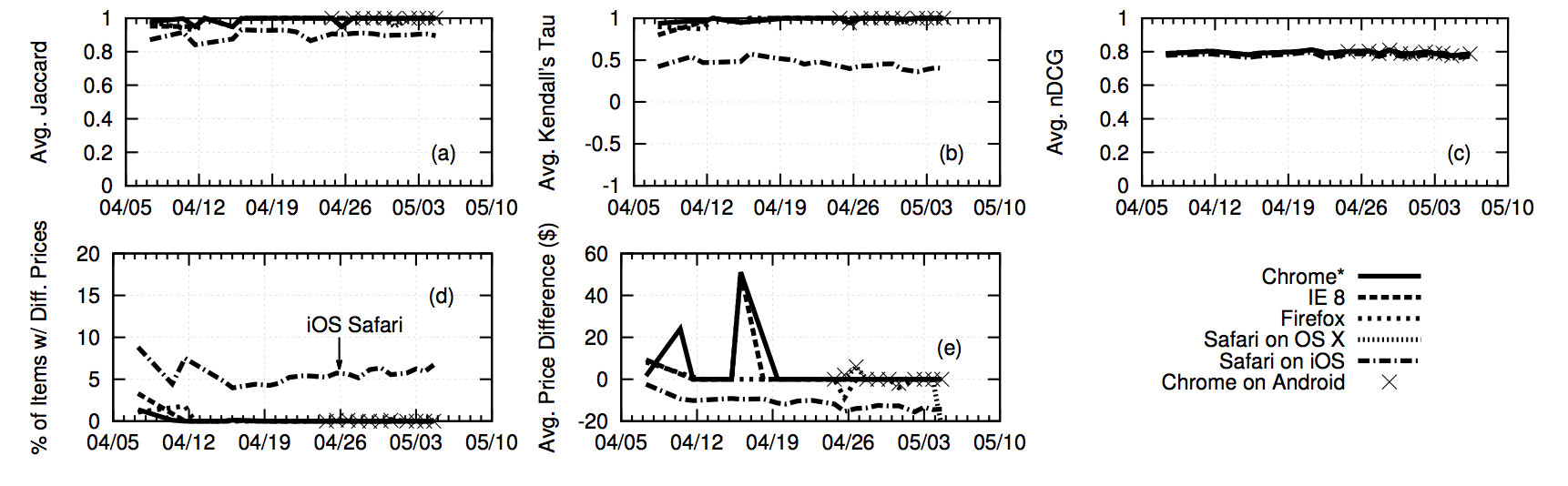

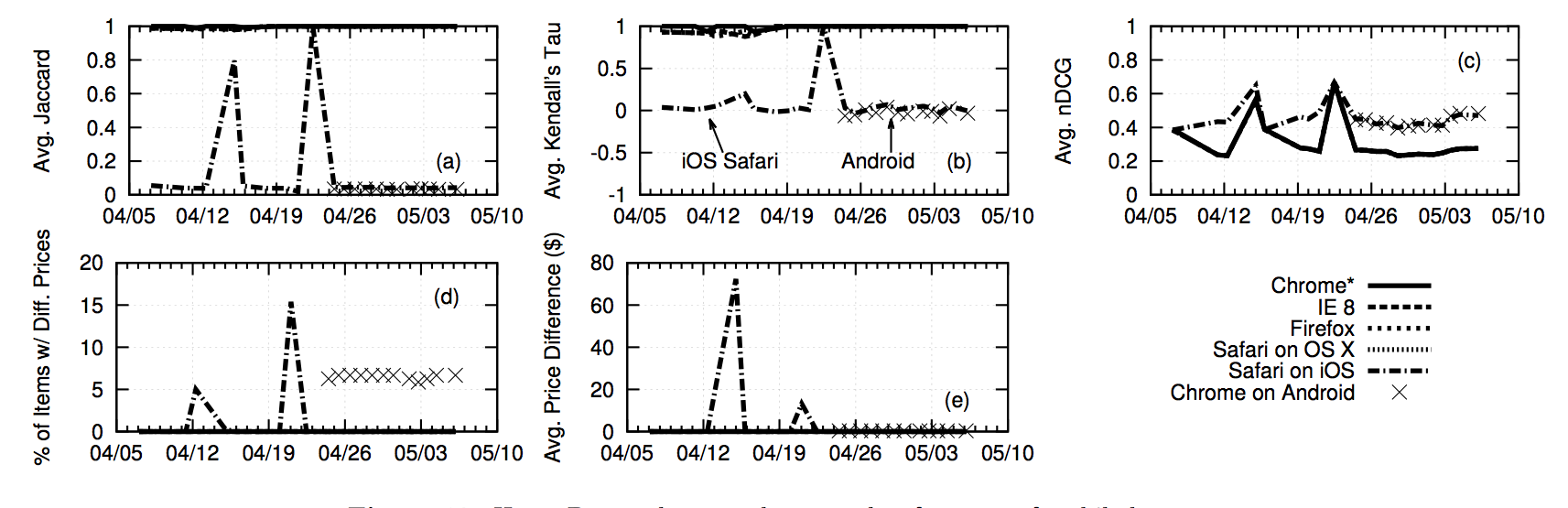

For Travelocity, we discovered that they alter hotel search results for users who browse from iOS devices. The image below shows that users browsing with Safari on iOS receive slightly different hotels, and in a much different order, than users browsing from Chrome on Android, Safari on OS X, or other desktop browsers. The takeaway from the image below is that we observe evidence consistent with price discrimination in favor of iOS users on Travelocity. Unlike Cheaptickets and Orbitz, which clearly mark sale price “Members Only” deals, there is no visual cue on Travelocity’s results that indicates prices have been changed for iOS users.

Home Depot

Among the 10 retailers we ran experiments on, we only discovered evidence of personalization on Home Depot. Similar to our findings on Travelocity, Home Depot personalizes results for users with mobile browsers. In fact, the Home Depot website serves HTML with different structure and CSS to desktop browsers, Safari on iOS, and Chrome on Android.

The image below depicts the results of our browser experiment on Home Depot. Strangely, Home Depot serves 24 search results per page to desktop browsers and Android, but serves 48 to iOS. We discovered the pool of results served to mobile browsers contains more expensive products overall, leading to higher nDCG scores for mobile browsers. Note that nDCG is calculated using the top k results on the page, which in this case is 24 to preserve fairness between iOS and the other browsers. Thus, Home Depot is steering users on mobile browsers towards more expensive products.
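For readers unfamiliar with nDCG, the sketch below shows one common formulation, using each product's price as its relevance score and truncating to the top k results. The exact discount function and the choice of ideal ordering in our `ndcg_*.py` scripts may differ; the function names and sample prices here are illustrative assumptions.

```python
import math


def dcg(prices):
    """Discounted cumulative gain, using each price as the relevance score."""
    return sum(price / math.log2(rank + 1) for rank, price in enumerate(prices, start=1))


def ndcg_at_k(prices, k=24):
    """nDCG over the top k results; the ideal ordering is most expensive first."""
    top_k = prices[:k]
    ideal = sorted(prices, reverse=True)[:k]
    ideal_dcg = dcg(ideal)
    return dcg(top_k) / ideal_dcg if ideal_dcg > 0 else 0.0


# Made-up result pages, listed in the order shown to the user.
expensive_first = [300.0, 200.0, 100.0, 50.0]
cheap_first = [50.0, 100.0, 200.0, 300.0]

print(ndcg_at_k(expensive_first, k=4))
print(ndcg_at_k(cheap_first, k=4))
```

A page that lists expensive products near the top scores closer to 1, which is why a higher nDCG is read as evidence of steering towards more expensive products.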

In addition to steering, Home Depot also discriminates against Android users. We discovered that Android users consistently see differences on about 6% of prices (1 or 2 of the 24). However, the practical impact of this discrimination is low: the average price difference is about $0.41.

Company Responses

Prior to the public release of our paper, we contacted all of the companies involved so that they were aware of our research and findings. Below are the responses we received from companies that we are allowed to make public. Note that the final version of the paper addresses the concerns raised in the responses we received.

Orbitz

Prior to the publication of our paper we sent this email to Orbitz:

Hello,

My name is Christo Wilson, and I am a professor at Northeastern University. Recently, my colleagues and I have been researching the algorithms used by major websites -- including Cheaptickets and Orbitz -- to personalize content for their users. In particular, we have just concluded a study examining the personalization algorithms used by e-commerce sites that determine what products and prices users receive when they search on these sites. Our experiments demonstrate that Cheaptickets and Orbitz personalizes search results for users. Specifically, the prices of some products are consistently altered for a subset of users on the site. This is commonly referred to as price discrimination.

I have attached a pre-publication version of our paper. Our paper has been accepted at the Internet Measurement Conference 2014 (http://conferences2.sigcomm.org/imc/2014/) and will be publicly released in early September.

I am reaching out to you for any comments or concerns you may have about the draft of our paper. There is still a chance to edit the manuscript before it is publicly released, and we would appreciate any feedback you have on our results. Thank you for your time.

Christo Wilson
Assistant Professor
College of Computer Science
Northeastern University
http://www.ccs.neu.edu/home/cbw/

The PDF below is the response we got, along with several other supporting documents supplied by Orbitz.

- Orbitz response

- Orbitz.com Members Only news release June 2011

- Online travel booking mtd (Exhibit 2)

- USAToday Travel Blog Thursday

- USAToday Travel Blog Wednesday

Expedia

We spoke on the phone with John Kim, Chief Product Officer, and Dan Friedman, Senior Director of Stats Optimization at Expedia. They confirmed our findings that Expedia and Hotels.com perform extensive A/B testing on users. However, Mr. Friedman stated that Expedia does not implement price discrimination on rental cars, which does not agree with the results of our study. After speaking with the representatives from Expedia, we went back and double-checked our results from Expedia, and confirmed that real users see altered prices on rental cars at a significantly higher frequency than our experimental controls. Anyone wishing to confirm our results is welcome to download our data and analysis scripts from the bottom of the page.

Conclusion

To summarize our findings: we find evidence for price steering and price discrimination on four general retailers and five travel sites. Overall, travel sites show price inconsistencies in a higher percentage of cases, relative to the controls, with prices increasing for AMT users by hundreds of dollars. Finally, we observe that many AMT users experience personalization across multiple sites.

Data

Each zip file below contains all of the synthetic test data for a company and product type. Unfortunately, we are unable to release the AMT data due to privacy concerns.

Code

Our code can be found here.

Included are a few different things. Below are examples of how to run each script so that you can replicate our experiments.

- Run `python run_stores.py` to start collecting data. This script sets up the SSH tunnels and executes all of the experimental treatments by running PhantomJS instances. `run_spenders.py` is the same, but tailored specifically for the purchase history treatments.

- Run `python compare_*.py [company] [results HTML 1] [results HTML 2]` to compare two pages of results. An example usage is `python compare_cars.py expedia nyc_1.html nyc_2.html`.

- Run `python analyze_*.py` to go through HTML files and extract the prices on the pages and get metrics about them. `ndcg_*.py` does the same thing, but outputs the nDCG numbers instead.

- Run `gnuplot.py` to collect all of the analysis output and generate plots.

- `bucket_table.py` and `clear_cookies.py` are scripts related to identifying A/B test buckets for Expedia and Hotels.com.